AI’s Impact on Cyber Risks

Ever since AI became widely available just over a year ago, it’s been on everyone’s lips. However, I don’t hear many people stopping to think about the risks that come with it, so I want to offer a slightly different take.

This post won’t get into the cool new ways you can use AI to trim out tedious tasks or how it could impact the future of various jobs. Instead, with that potential in the back of your mind, let’s talk a little about what this means as a risk to our business.

I’ll keep this high level and help give you some ways to think about the role AI could be playing in your business (or personal use).

AI Isn’t New in Cybersecurity

While ChatGPT and access to OpenAI as a development tool are relatively new, and other Large Language Models (our current, popular form of AI) continue to be built, AI itself is not necessarily new to the cyber world.

You may not realize it, but you’ve likely had AI helping to thwart attacks in some form or another.

AI’s ability to recognize and analyze language and behaviors has been an underlying part of newer defenses such as Next Gen Antivirus and Endpoint Detection and Response (EDR) solutions, to name a few areas that come to mind.

Before AI was used this way, most Antivirus solutions could only identify known malware and viruses. Newer versions of these solutions can identify not just what is ‘known’ but also what seems suspicious. Instead of recognizing a specific piece of code, detection is based on heuristics, or how a program ‘behaves’. EDR works in a similar way: activity that sticks out from the normal use of an endpoint suggests that something needs to be investigated further.
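To make the difference concrete, here is a minimal sketch contrasting the two approaches. Everything in it is an assumption for illustration: the hash list, the behavior names, the weights, and the threshold are all made up, and real Next Gen Antivirus and EDR products use far richer signals and learned models.

```python
import hashlib
from typing import Iterable

# Hypothetical signature database: hashes of files already known to be bad.
KNOWN_BAD_HASHES = {hashlib.sha256(b"known malware sample").hexdigest()}

# Hypothetical behavior weights (assumed values, not from any real product).
SUSPICIOUS_BEHAVIORS = {
    "encrypts_many_files": 5,
    "disables_backups": 4,
    "contacts_unknown_server": 2,
    "modifies_startup_items": 2,
}
ALERT_THRESHOLD = 6  # assumed cut-off for raising an alert


def signature_match(file_bytes: bytes) -> bool:
    """Signature approach: flag only files whose hash is already known."""
    return hashlib.sha256(file_bytes).hexdigest() in KNOWN_BAD_HASHES


def behavior_score(observed: Iterable[str]) -> int:
    """Heuristic approach: add up a weight for each suspicious behavior seen."""
    return sum(SUSPICIOUS_BEHAVIORS.get(name, 0) for name in set(observed))


def is_suspicious(observed: Iterable[str]) -> bool:
    """Alert when the combined behavior score reaches the threshold."""
    return behavior_score(observed) >= ALERT_THRESHOLD
```

A brand-new malware variant would slip past `signature_match` because its hash isn’t on the list yet, but it could still trip `is_suspicious` if it starts encrypting files and disabling backups.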

With enhancements to AI and these resources, we can expect to see a lot of new cybersecurity tools on the market that help take this to a new level – ultimately either stopping an attack or at least detecting it and alerting security engineers more quickly.

Attackers, always trying to stay one step ahead of our defenses, have also been leveling up over the years. They are looking to AI to help create more advanced attack methods that have the potential to skirt by these leveled-up defenses.

This is all really more about where AI can be used as a defensive or offensive tool. That’s not where it’s necessarily showing up in our conversations today, however.

What About AI in Other Technology? Is There Risk?

The quick answer is: YES, there’s always risk! We just need to determine if it’s a benefit worth the risk and how we can mitigate the risks we accept – Risk Tolerance and Risk Mitigation.

One thing I can’t answer for you is “HOW will my AI tool be compromised?”. I’m not sure we really know the extent of “How” yet. There are some types of attacks we’re learning about, and I’ll mention them, but that’s not the question to worry about (at least not for you and me).

My thought here is that this is still an evolving threat-scape. Even with other types of technology, sometimes we don’t know how an attack could occur until after people have started using it.

Researchers do what they can to come up with things before an attacker does, but it’s a constant battle. And threat actors are known for being extremely creative. The moment we think we know everything, a new attack could appear (called a Zero Day when it exploits a vulnerability that defenders didn’t know about yet) and we’re back at square one.

We’ll have to rely on researchers and be prepared to be reactive when new vulnerabilities become known. We’re also going to need to ask our vendors how they’re staying on top of these known vulnerabilities or discovering potential vulnerabilities before they become a problem.

I say all of this because, at our level, you and I won’t really be the ones to solve the “How” question.

So, what is the question we should ask instead?

“What’s at stake IF it’s attacked? How would it IMPACT us?”

This is what we can definitely start to think about and what we can begin to answer. To do so, look to the CIA of Cybersecurity as a way to think about how a compromise could impact our AI systems.

If you haven’t heard me talk about CIA in this sense before, it stands for Confidentiality, Integrity and Availability. Here’s how to think of it:

- Compromise of Confidentiality – information systems and data that need to remain confidential were exposed to someone that wasn’t authorized.

- Compromise of Integrity – information systems and data that we rely on to be accurate and make decisions cannot be trusted when compromised.

- Compromise of Availability – information systems and data we rely on as a function of business operations are not able to be accessed or used when we need them.

Now, more things can happen after an attack to further impact you, like cost of recovery or fines/fees related to regulations. However, the items above are things we can think about proactively and that directly contribute to the level of risk we may be taking.
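One way to start thinking this through is to write the three impacts down for each AI tool you use. The sketch below is only an illustration of that idea: the low/medium/high scale, the class name, and the example tool are my own assumptions, not a formal risk methodology.

```python
from dataclasses import dataclass

# Assumed three-level scale for illustration.
LEVELS = {"low": 1, "medium": 2, "high": 3}


@dataclass
class ImpactAssessment:
    """Impact ratings for one AI tool, one rating per part of the CIA triad."""

    tool: str
    confidentiality: str
    integrity: str
    availability: str

    def ratings(self) -> dict:
        return {
            "confidentiality": self.confidentiality,
            "integrity": self.integrity,
            "availability": self.availability,
        }

    def overall(self) -> str:
        """The overall impact is the highest of the three ratings."""
        return max(self.ratings().values(), key=lambda level: LEVELS[level])

    def biggest_concern(self) -> str:
        """The part of the triad with the highest rating (first one on a tie)."""
        ratings = self.ratings()
        return max(ratings, key=lambda name: LEVELS[ratings[name]])


# Hypothetical example: a customer-facing chatbot.
chatbot = ImpactAssessment(
    tool="customer chatbot",
    confidentiality="medium",
    integrity="high",
    availability="low",
)
```

Taking the highest of the three ratings as the overall impact is a deliberately cautious choice: one serious exposure is enough to make the tool worth a closer look.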

With that all in mind, let’s look at how AI in other technologies could impact our business based on these measurements.

Compromise of Confidentiality

People are pretty excited to have AI help summarize and analyze data. Maybe it’s even helping to process data entry or how data flows between systems.

As these AI systems interact with data and systems that are confidential in nature, the AI models may store that information in different ways.

My biggest concern here is that AI doesn’t really forget. In fact, many models use your interactions to be able to improve the way the model works, and it effectively gets better as it learns.

If threat actors can either trick these systems into spitting out information that should be confidential, or if they can get into the backend database where this information could be stored, we have a problem.

My opinion here may ruffle some feathers, but when companies say that their systems are not storing this data or using it to improve their models – I don’t really buy it. They may think that, but I’m a little skeptical. In the last several years that we’ve all been reading about cyber-attacks on a regular basis, how often has there been a factor of over-confidence by those that were eventually compromised?

After all, people are already coming up with ways to trick AI into sharing things it’s not supposed to, often breaking the rules that are designed to keep the program in line and protect sensitive info.

For example, one person was able to get ChatGPT to generate Windows Keys, and this is only one of several ways I’ve seen people trick it into doing this.

Again, we don’t know all the ways of “HOW” this could occur. The questions need to instead shift back to “IF” and what that means for us.

Questions to ask about a potential Compromise of Confidentiality:

- What data is this AI tool exposed to? (Both that it can access and that could be entered)

- Where will data reside? How is it protected at rest or in transit?

- Could the AI tool use information it has access to in combination with other data to create sensitive data? Maybe you only let it see partial information. Could it piece together the rest of it? (Keep in mind that sensitive data may not just be about a person. It could be Intellectual Property or other forms of information that need to stay protected.)

- Based on the data this AI tool has, if it were ever to be accessed by an unauthorized party, what laws could this trigger? (HIPAA, PCI, others?)

- Based on the data, how many individuals could this impact if compromised?

- Do we have a way to identify if data from this system was exposed?
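One practical way to act on the first question is to limit what reaches the AI tool in the first place. Here is a minimal sketch that masks a few obvious patterns before text is sent anywhere. The patterns and labels are my own simplified assumptions, they will miss plenty of sensitive data, and a real deployment would need a proper data loss prevention tool.

```python
import re
from typing import List, Tuple

# Simplified, assumed patterns for illustration only.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by spaces or dashes.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> Tuple[str, List[str]]:
    """Mask sensitive-looking values and report which kinds were found."""
    found = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


prompt = "Email jane.doe@example.com about SSN 123-45-6789 today."
safe_prompt, kinds = redact(prompt)
# safe_prompt is what you would pass to the AI tool; kinds can be logged
# so you have a record of what people tried to enter.
```

Logging what was caught also helps with the last question in the list, since it gives you a trail of what kinds of data people were trying to put into the tool.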

Compromise of Integrity

This is one that I think is a high area of risk for AI-based systems.

Typically, when I think of Compromises of Integrity, I think about things like Wire Transfer Fraud where a routing number gets changed. Or maybe a Business Email Compromise where the compromised account is used to impersonate someone that others might trust to launch a second attack. Worse, what about a power plant that can’t trust its readings, where bad information could result in some form of physical harm?

For AI though, I wonder what happens when the job this AI tool was supposed to do was done wrong or if the information it gave someone was wrong?

We’ve already heard stories of this happening.

Okay, here’s one of the ways “How” AI can be compromised: Prompt Injection attacks. Someone enters code or a carefully crafted prompt, and the AI starts to act abnormally.

Two recent situations where this was talked about:

- Who’s Harry Potter? Making LLMs forget from Microsoft.com

- Car Dealership Disturbed When Its AI Is Caught Offering Chevys for $1 Each from Yahoo.com

Both of these were for fun or research, but they raise a bigger question for me. If these are the things that the ‘good guys’ figured out, what tricks do the ‘bad guys’ still have up their sleeves and what nefarious deeds could come from this kind of manipulation?

The point is that AI can be tricked and confused into going against what it’s programmed to do. That ultimately raises the question: What happens if I can’t trust that what the AI is doing is accurate or correct?

So, here are questions to ask about a possible Compromise of Integrity:

- If the AI system has a Compromise of Integrity, what other systems could be impacted? (this will require some knowledge about where the AI sits in a flowchart of your information systems and data processes)

- If you’re using AI for creative purposes, how do you know you’re not using plagiarized work? What would happen if you did?

- Regarding how we use this AI-based system, what is the worst case if we can’t trust that it’s accurate? Does it impact customers? Could it put financials at risk?

- Is there a way to verify information to ensure it is accurate?

- How can we identify scenarios when there is a Compromise of Integrity and remediate the compromise quickly?
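To illustrate the verification question, here is a minimal sketch of checking an AI-generated answer against a source you already trust before acting on it. The scenario loosely follows the car dealership story, but the vehicle names, prices, and function names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Quote:
    """A price the AI tool has offered to a customer."""

    vehicle: str
    price: float


# Hypothetical floor prices that would come from a trusted internal system,
# never from the AI tool itself.
APPROVED_MINIMUMS = {"example sedan": 22000.0, "example truck": 41000.0}


def verify_quote(quote: Quote) -> Tuple[bool, str]:
    """Accept a quote only if it agrees with the trusted price list."""
    floor = APPROVED_MINIMUMS.get(quote.vehicle)
    if floor is None:
        return False, "unknown vehicle, send to a human"
    if quote.price < floor:
        return False, "price below approved minimum, send to a human"
    return True, "ok"
```

The design choice here is that the AI never gets the final say. Anything it produces that fails the check is routed to a person, which also gives you a signal that something may be off with the tool.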

Compromise of Availability

AI is quickly filling in places in operational flow charts that are easy to delegate. It’s helping businesses drive efficiencies and level up across the board.

What happens when these newly replaced processes stop working though?

A Compromise of Availability, like those seen with Ransomware or Distributed Denial of Service (DDoS) attacks, happens when systems that we rely on aren’t accessible or reliable. Sometimes the goal is to extort money in exchange for getting them back, and other times it’s just to harm the business. Even a blackout from a natural disaster could cause this kind of situation to occur.

When you leverage AI as a critical tool, you now may find that you depend on it working.

Side note:

What about the transactional nature of AI tools? I’m throwing this situation in under the Availability component of the CIA of Cybersecurity only because it resembles a DDoS attack. A DDoS attack overloads a system and causes it to crash. Commonly, this is aimed at an online service that someone wishes to disrupt.

However, not only could the AI system be taken down by overloading it, but it may also result in expensive transactions that rack up a major bill in the process.
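One simple defense against a runaway bill is to cap how much the AI tool can be used in a given window. The sketch below shows the idea. The class name and the limits are assumptions for illustration, and many AI providers offer their own usage limits that you should check first.

```python
class SpendGuard:
    """Refuses new AI calls once a call cap or a spend cap is reached."""

    def __init__(self, max_calls: int, max_spend_cents: int) -> None:
        self.max_calls = max_calls
        self.max_spend_cents = max_spend_cents
        self.calls = 0
        self.spend_cents = 0

    def allow(self, estimated_cost_cents: int) -> bool:
        """Return True and record the call only if both caps still hold."""
        if self.calls + 1 > self.max_calls:
            return False
        if self.spend_cents + estimated_cost_cents > self.max_spend_cents:
            return False
        self.calls += 1
        self.spend_cents += estimated_cost_cents
        return True

    def reset(self) -> None:
        """Call at the start of each window, for example once an hour."""
        self.calls = 0
        self.spend_cents = 0


# Hypothetical limits: at most 3 calls and 100 cents per window.
guard = SpendGuard(max_calls=3, max_spend_cents=100)
```

Before each request you would check `guard.allow(...)` and skip or queue the request when it returns `False`. A flood of requests then costs you some availability, but not an open-ended bill.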

The bottom line is that we’re putting a lot of these AI tools into processes that our business depends on, and we need to be thinking about what that exposure means.

Questions to ask about Compromises in Availability:

- If this system is not available, what else will break in our business processes or in other integrated technology?

- What specific processes are impacted by this? Who could this impact?

- Do we have an alternate plan for workarounds if this occurs?

- Will we know if this system is unavailable?

- How quickly can we restore availability?
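Two of these questions, having a workaround and knowing when the system is down, can be sketched together. In the example below, every function name is hypothetical: `broken_ai_service` stands in for whatever AI tool you call, and `manual_queue` stands in for your non-AI workaround.

```python
from typing import Callable, List, Optional, Tuple


def call_with_fallback(
    primary: Callable[[str], str],
    fallback: Callable[[str], str],
    request: str,
    on_failure: Optional[Callable[[Exception], None]] = None,
) -> Tuple[str, str]:
    """Try the AI tool first; if it fails, alert someone and use the workaround."""
    try:
        return primary(request), "ai"
    except Exception as exc:
        if on_failure is not None:
            on_failure(exc)  # this is how we find out the system is unavailable
        return fallback(request), "fallback"


# Hypothetical stand-ins for illustration.
def broken_ai_service(request: str) -> str:
    raise ConnectionError("AI service unreachable")


def manual_queue(request: str) -> str:
    return f"Queued for staff: {request}"


alerts: List[str] = []
result, source = call_with_fallback(
    broken_ai_service,
    manual_queue,
    "Summarize ticket 42",
    on_failure=lambda exc: alerts.append(str(exc)),
)
```

The second value in the result tells you which path handled the request, so you can track how often you are running on the workaround.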

Now What?

Look, I am right there with most of you on this. I see immense opportunity with AI and even have software ideas of my own that I plan to seriously pursue. But the risk associated with all of it is not something I’ll take lightly, and I advise you to take the same level of caution before going all-in on AI related tools.

As you consider using AI in your business, even if you have already gotten started, make sure you ask the questions we covered above and dig deeper than just the generic lists I gave you.

Start to talk to your staff about both the benefits and risks related to AI. Make sure that you know what technology is getting used in your business and that the staff that uses these tools looks at the bigger picture. AI should be discussed in your regular Security Awareness Training plan to build an understanding of the threats it poses.

Follow best practices to reduce the likelihood of an attack. It may feel daunting to think about all of the ways AI might be attacked. Leave that work to the researchers though and just keep an eye on things you can do to minimize the likelihood of an attack by implementing the tried-and-true cybersecurity best practices. In other words, focus on your defensive tools: MFA, good passwords, minimize the data you put in, continue to educate yourself and track what information researchers are putting out there, etc. (If you don’t know what these are yet, let me know and I’ll point you to resources you can use.)

Focus on what you CAN control. Get an understanding of your level of risk based on the Impact (the CIA of Cybersecurity will guide you). Start to think about the questions I laid out above and what that means for your particular use of AI.

Don’t just trust, VERIFY! Make sure you continue to look at the security practices your providers are using. Ask them questions about how they’re staying ahead of attacks and about ways they may be able to minimize impacts if there’s a compromise of Confidentiality, Integrity, or Availability.

Get help from a cybersecurity consultant. I hope my posts and thoughts can help you take this to another level, but this is not a path I recommend walking alone. Find help from someone you can trust that can be your guide on this cybersecurity journey.

Or, Let Me Help

Whether you’re looking for help applying this post to your own risks, or just want to be able to talk to your clients about risks like this without getting overly technical, reach out to me for ways we can collaborate!

Ryan Smith

Ryan's experience across cybersecurity, sales, insurance, technology, education, and mathematics has helped him become a business-oriented problem solver who can simplify complex topics.

His eclectic and diverse background is now able to be leveraged by businesses that are interested in outside perspectives to help them overcome challenges.

Popular Posts

RLS Consulting Is (Re)Opening for Business!

Cybersecurity Begins at the Individual

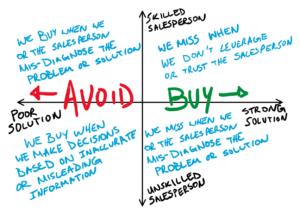

The Buyer’s Challenge

Newsletter

Looking for Identity Theft Protection?

RLS Consulting is a proud distributor of defend-id ©.

Learn how protecting your employees from the perils of Identity Theft and Fraud can help your business security and overhead costs.

Want to sell defend-id through your insurance agency?

Learn More